I know this name for a long time. But never learnt it. Now it’s time.

Recap

Previously, Karatsuba’s algorithm for integer multiplication. It computes the result in $O(n^{\log_2 3})$ where $n$ is the number of digits.

Another recap: polynomial representation:

$$ p(x) = \sum_{i = 0}^{n}p_ix^i $$And we can represent this polynomial using an array of size $n$.

Evaluations and additions of polynomials are straightforward computation. Can be done in $O(n)$ time. Naive multiplication is slow, however. It takes $O(n^2)$ time to compute all the coefficient multiplications. FFT helps us speed up this multiplication.

Other representations of polynomials

Sample points!

$n$ degree polynomial takes $n+1$ points to identify. An easy, interesting, but super useful algorithm in crypto: Shamir Secret Sharing.

Multiplication

$O(n)$ time to multiply points element wise. However, since degree grows to $O(2n)$, we need $2n + 1$ samples for the result.

Addition

$O(n)$ time.

Evaluations (the catch)

$O(n^2)$ time.

$$ p(x) = \sum_{j = 0}^{n-1} \left( \frac{y_j}{ \prod_{k \neq j} (x_j - x_k) } \prod_{k \neq j} (x - x_k) \right) $$Roots

$$ p(x) = c(x-r_1) \cdots (x - r_n) $$Multiplication

$O(n)$ time to multiply everything.

Addition

Complicated. Long time.

Evaluation

Simply plug in and compute in $O(n)$ integer multiplications.

Combination?

We want to combine advantages of the coefficient representation and the sampling representation. Suppose we are given the following polynomial:

$$ p(x) = \sum_{i = 0}^{n - 1}a_i x^i $$And sample points: $(x_0, \ldots, x_{n-1}),(y_0, \ldots, y_{n-1})$.

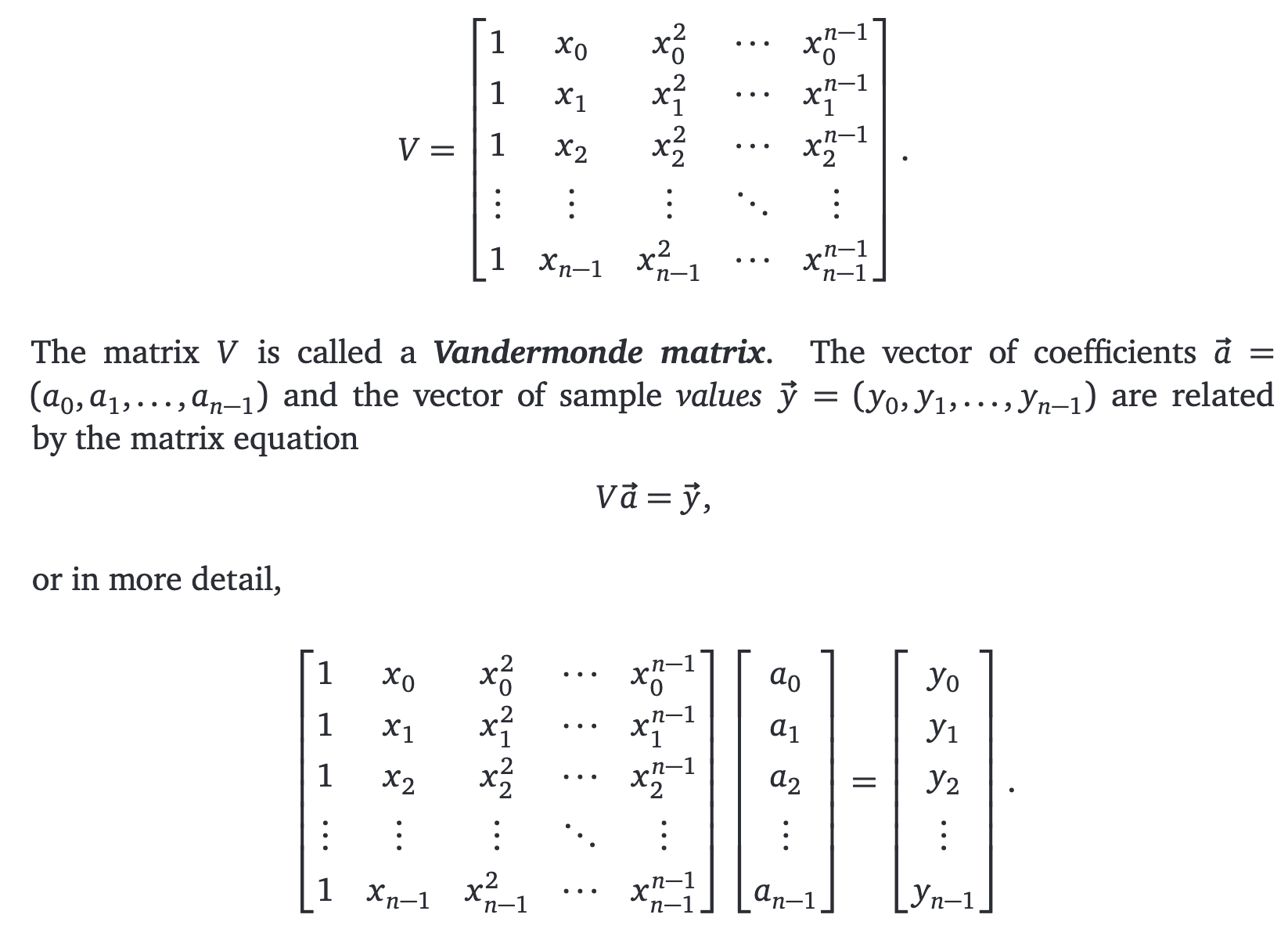

We can convert from coefficients to samples by using Vandermonde matrix:

This is like evaluating the polynomial $n$ times. It takes $O(n^2)$ time. However, can we choose the sample points carefully so that we can make this faster?

Recall: divide and conqure in integer multiplications

huh? They look like polynomials.

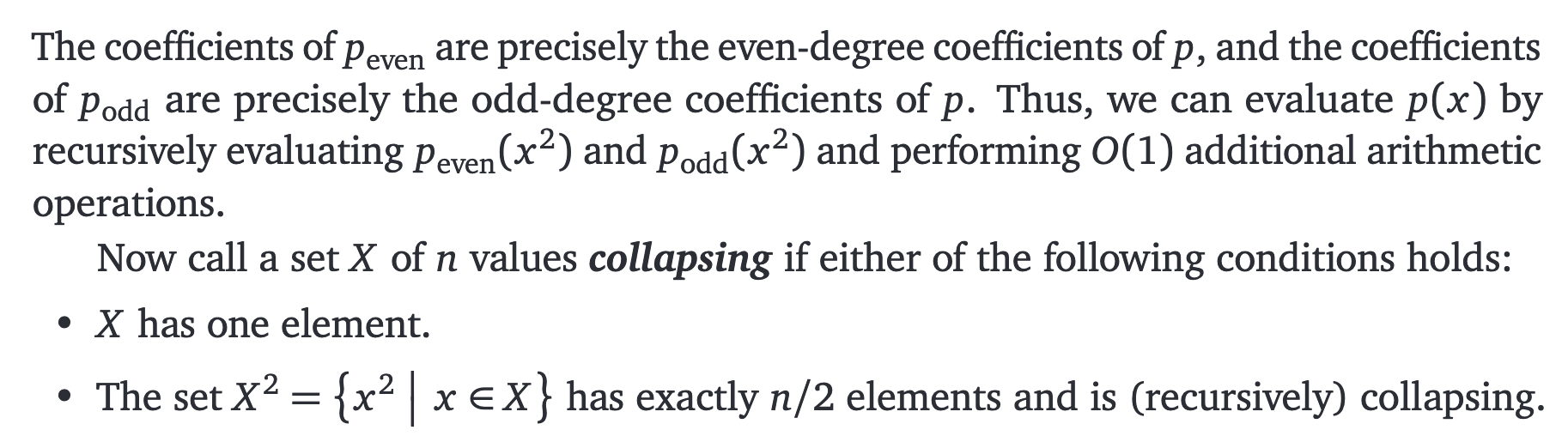

In polynomials?

“The size of a collapsing set must be a power of 2.”

If $T(n)$ = evaluating a degree $n-1$ polynomial on a collapisble set of $n$ sample points,

$$ T(n) = 2T\left(\frac{n}{2}\right) + O(n) $$Constructing a huge collapsible set

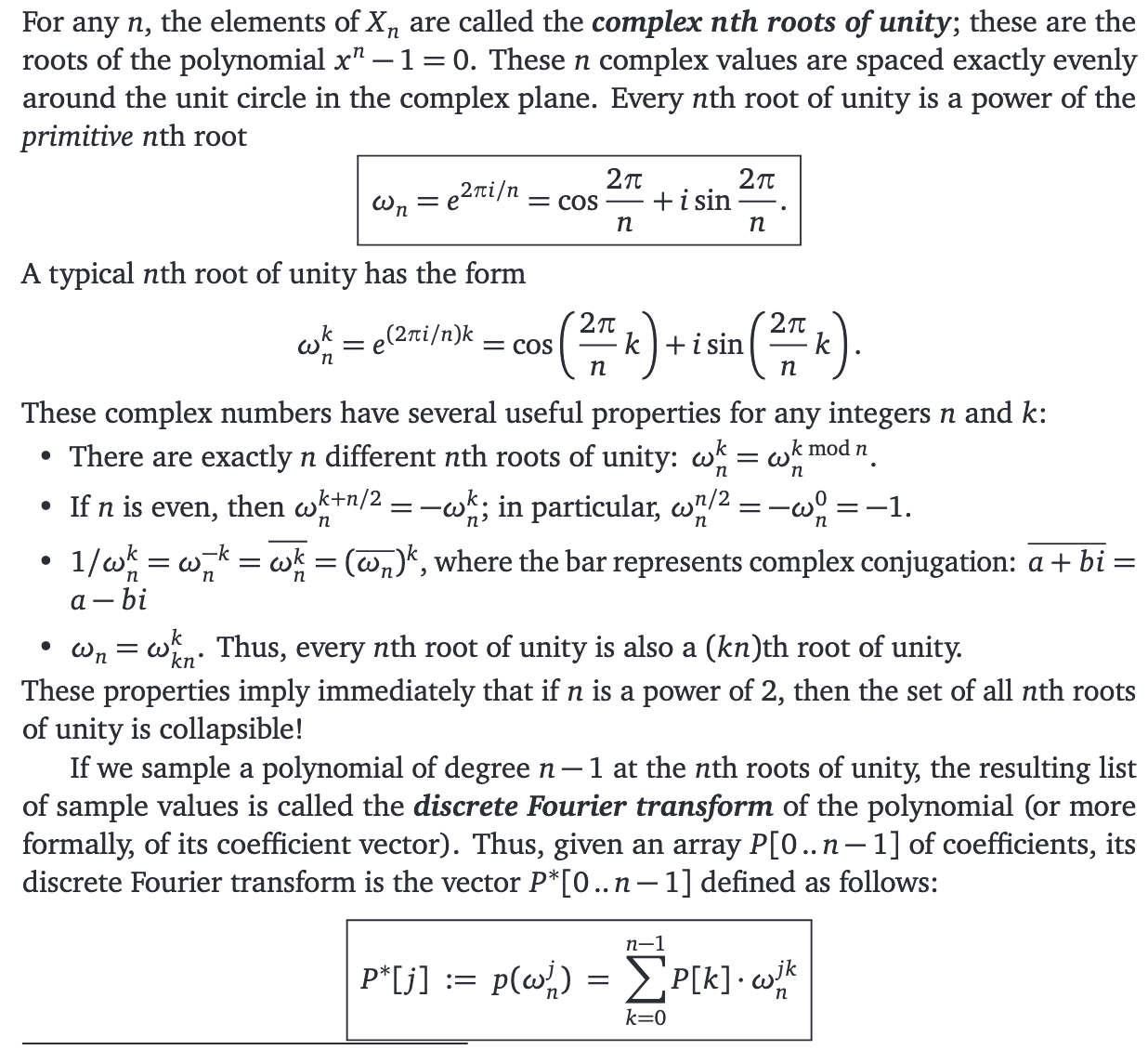

$$ \set{1} \to \set{1, -1} \to \set{i, -i, 1, -1} \to \cdots $$Reapeating $k$ times gives us a collapsible set of size $2^k$. This is called $2^k$ complex roots of unity.

We want to go back from samples to coeff:

Previously we had $\vec{y} = V \cdot \vec{a}$. To get back, we multiply the inverse of $V$ on both sides. This multiplication can also be solved using DFT (only minor changes).

Now, polynomial multiplication

High-level: for the input we have two polynomials $P, Q$. We run FFT to get $P^\*, Q^\*$, which are in sample form. We do multiplication on these two samples in $O(n)$ time, getting $R^\*$. Finally, we compute inverse FFT and return.

Other use cases

- Convolution of sequences. Almost exactly the same as polynomials. But we only want the coefficients.

Reference:

https://courses.grainger.illinois.edu/cs473/fa2024/notes/A-fft.pdf